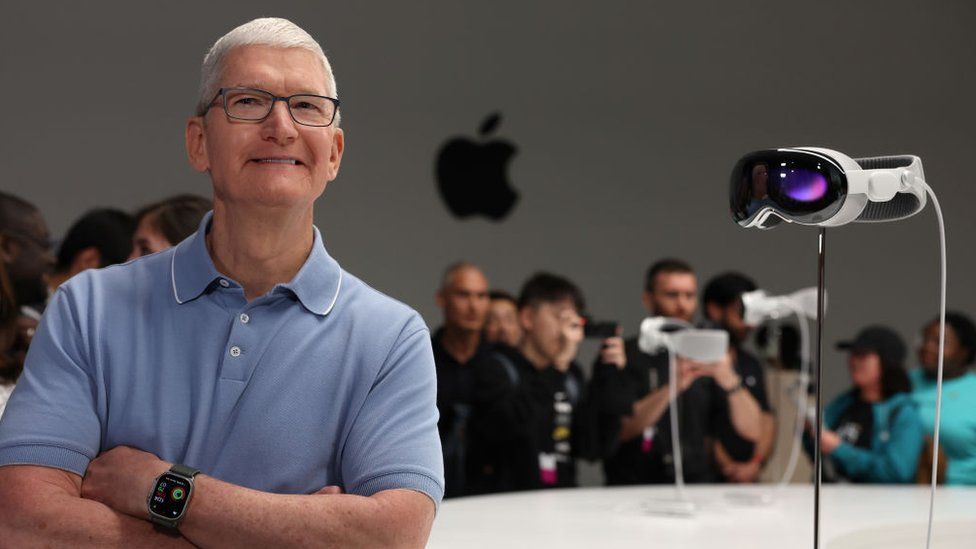

In a world where technology continually pushes the boundaries of human-computer interaction, Apple has made a game-changing move with its latest offering, the Apple Vision Pro. This isn’t just another gadget in the vast sea of tech; it’s a revolutionary tool set to redefine our interaction with the digital world. Think of it as a personal, portable display, with more pixels than a 4K TV for each eye, that responds not just to your voice but to the subtlest of your gestures and the direction of your gaze.

The Apple Vision Pro stands out with its array of innovative features. The device enables control through hand gestures, eye movements, and spoken commands, effectively introducing a whole new user interaction paradigm. Imagine selecting an object on your screen just by looking at it, or scrolling through content with a mere flick of your hand – the Vision Pro makes this a reality.

But what does this mean for User Experience design? Well, quite a lot.

Gesture control, the very heart of the Apple Vision Pro’s interactivity, is a burgeoning field in UX design. The ability to control a device through natural, physical movements offers a level of intuitiveness and immersion that traditional input methods simply cannot match. It breaks down the barriers between users and technology, creating a seamless and engaging user experience that feels less like interacting with a machine and more like an extension of oneself.

As UX designers, we’re standing at the frontier of a new era in interaction design, where understanding and leveraging gesture control becomes crucial. This isn’t just about creating interfaces anymore; it’s about choreographing a digital interaction ballet that’s intuitive, efficient, and delightfully human.

Join me on this journey as we dig into the world of Apple Vision Pro and the exciting realm of gesture control. Let’s explore how this cutting-edge technology is reshaping UX design, the opportunities it presents, and the challenges we might face in harnessing its potential.

Understanding Gesture Control

First things first, let’s demystify the term ‘gesture control’. At its core, gesture control is a form of human-computer interaction where the user navigates or manipulates digital elements using physical movements, typically hand or finger gestures. But it’s not just the definition that’s important here; it’s the impact of this technology on UX design that truly matters.

Why is gesture control so crucial in UX design? Well, the answer lies in the word ‘natural’. Gestures are an innate part of human communication. We’re biologically wired to interpret and respond to them. So, when you employ gesture control in user interfaces, you’re effectively speaking the users’ language, making the interaction more intuitive and immersive. Plus, let’s admit it – it’s just plain cool!

Now, let’s take a brief look at the evolution of gesture control. This isn’t a brand-new concept – far from it. Remember Nintendo’s Wii console? Launched back in 2006, the Wii Remote was one of the first mainstream controllers to incorporate motion sensing, allowing users to play tennis, bowl, and box in their living rooms. Then, in 2010, came Microsoft’s Kinect for Xbox 360, which took things a step further by allowing users to control games using full-body movements, no controller needed!

Fast forward to today, and we see gesture control not only in gaming but in a myriad of applications. Smart TVs, VR systems, even some high-end cars use gesture control for various functions. Apple’s own iPhone introduced gestures to the mass mobile market, turning pinches and swipes into second nature for millions.

However, the Apple Vision Pro takes this a step further, transforming the relatively flat plane of touchscreen interaction into a three-dimensional experience. It’s not just about swiping left or right anymore; it’s about reaching out, grabbing, pushing, pulling – a complete spatial interaction model.

Gesture control has come a long way, and with devices like Apple Vision Pro, it’s poised to leap even further. As UX designers, it’s our exciting challenge to keep pace with this evolution and design experiences that truly leverage this natural and immersive form of interaction.

Gesture Control in Apple Vision Pro

Analyzing Gesture Control Implementation

Now that we’ve established what gesture control is and how it has evolved, let’s dive into the exciting part – how Apple Vision Pro has harnessed this technology to create a user experience that’s not just functional, but also revolutionary.

Apple Vision Pro uses gesture control in a way that feels familiar yet futuristic. Looking at an app icon highlights it, tapping your thumb and index finger together selects it, and a flick of the wrist scrolls through its content. In essence, it allows the digital world to respond to our natural movements, blurring the line between the real and the virtual.
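To make the look, tap, and flick loop concrete, here is a minimal Python sketch of the interaction model as a tiny state holder. It is only an illustration of the idea, not how visionOS is implemented: the class `GazePinchSession` and its methods `look_at`, `pinch`, and `flick` are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class GazePinchSession:
    """Toy model of a look-to-target, tap-to-activate interaction loop (hypothetical)."""
    focused: Optional[str] = None          # element currently under the user's gaze
    scroll_offset: float = 0.0             # accumulated scroll position
    log: list = field(default_factory=list)

    def look_at(self, element: Optional[str]) -> None:
        # Gaze only moves focus; on its own it never triggers an action.
        self.focused = element

    def pinch(self) -> Optional[str]:
        # A finger tap activates whatever is focused; with no focus it is ignored.
        if self.focused is None:
            return None
        self.log.append(f"activate:{self.focused}")
        return self.focused

    def flick(self, delta: float) -> float:
        # A flick scrolls the content by the given amount.
        self.scroll_offset += delta
        return self.scroll_offset


session = GazePinchSession()
session.look_at("Photos")      # the user looks at an icon
opened = session.pinch()       # then taps their fingers together
session.flick(120.0)           # then flicks to scroll
print(opened, session.scroll_offset)
```

The design point worth noticing is the split of responsibilities: the eyes choose the target and the hand confirms it, so a stray glance never opens anything by accident.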

The Challenges in Gesture Design

However, designing for gestures, particularly in a 3D space, is not without its challenges. Recognizing gestures requires sophisticated sensors and algorithms that can interpret a wide array of possible movements. Moreover, there’s the question of how to guide users through an interface they can’t physically touch. How do you convey to a user what gestures are available, or what a particular gesture will do, without resorting to lengthy tutorials or overwhelming manuals?
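As a rough feel for the recognition side, the sketch below detects a pinch from the distance between two tracked fingertips. Everything here is an assumption for illustration: the class `PinchDetector`, the thresholds `PINCH_START` and `PINCH_END`, and the idea that fingertip positions arrive as 3D points in metres. Real hand-tracking pipelines are far more sophisticated.

```python
import math

# Hypothetical thresholds in metres. Using two values (hysteresis) stops the
# detector from flickering when the fingers hover near a single cut-off.
PINCH_START = 0.015
PINCH_END = 0.030


class PinchDetector:
    """Turns per-frame fingertip positions into 'began' / 'ended' events."""

    def __init__(self):
        self.pinching = False

    def update(self, thumb_tip, index_tip):
        # Euclidean distance between the two fingertips for this frame.
        d = math.dist(thumb_tip, index_tip)
        if not self.pinching and d < PINCH_START:
            self.pinching = True
            return "began"
        if self.pinching and d > PINCH_END:
            self.pinching = False
            return "ended"
        return None  # no state change on this frame


detector = PinchDetector()
frames = [
    ((0, 0, 0), (0.05, 0, 0)),  # fingers apart
    ((0, 0, 0), (0.01, 0, 0)),  # fingers touch
    ((0, 0, 0), (0.02, 0, 0)),  # slightly open, still counts as pinching
    ((0, 0, 0), (0.04, 0, 0)),  # clearly released
]
events = [detector.update(thumb, index) for thumb, index in frames]
print(events)
```

Even in this toy version, the third frame shows why gesture design is hard: the system has to decide what counts as "still pinching", and the user never sees that decision being made.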

Overcoming the Challenges

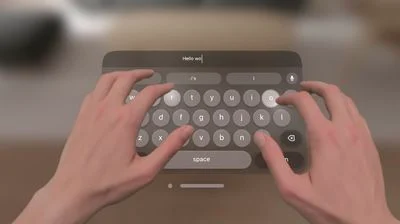

Here’s where Apple’s prowess in intuitive design shines through. Let’s examine one such example: the visionOS Virtual Keyboard. Instead of just superimposing a flat keyboard onto the user’s view, Apple made it possible to interact with the keyboard as if it were a real, tangible object in front of you. Typing is as simple as reaching out and pressing the keys, with each key responding visually and audibly to confirm your action. Because there is nothing physical to touch, this visual and audio feedback stands in for the tactile response of a real keyboard.

The Integration of Eye Movement and Voice Commands

Furthermore, Apple Vision Pro extends beyond gestures, incorporating eye movement tracking and voice commands into its interaction model. This multi-modal approach not only adds layers to the UX but also supports accessibility, catering to users who may find one mode of interaction more comfortable or feasible than others.
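One way to picture the multi-modal idea is a lookup in which every action can be reached through more than one input channel. The sketch below is a hedged illustration only: the `BINDINGS` table, the signal names, and the `resolve` function are all hypothetical and do not reflect any Apple API.

```python
# Hypothetical bindings: each action is reachable through more than one modality.
BINDINGS = {
    ("gesture", "pinch"): "select",
    ("voice", "select"): "select",
    ("gesture", "flick_up"): "scroll_down",
    ("voice", "scroll down"): "scroll_down",
}


def resolve(modality, signal, disabled=frozenset()):
    """Map an input signal to an action, skipping modalities the user has turned off."""
    if modality in disabled:
        return None
    return BINDINGS.get((modality, signal.lower()))


# The same action arrives by hand or by voice.
print(resolve("gesture", "pinch"))
print(resolve("voice", "Select"))
# A user who cannot or prefers not to gesture can switch that channel off.
print(resolve("gesture", "pinch", disabled={"gesture"}))
```

For designers, the useful exercise is filling in a table like this early: any action that appears under only one modality is a potential accessibility gap.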

Practical Applications and Implications of Gesture Control in UX Design

Adapting Traditional UI for Gesture Control

Adapting traditional UI to a gesture-based system can be challenging, as it involves shifting the paradigm from pointing-and-clicking to more nuanced, fluid movements. One interesting approach Apple has taken with Vision Pro is the adaptation of its well-established iOS layout. Despite the significant shift in interaction method, the grid of app icons presented in the Home View gives users a reassuring sense of familiarity.

Creating Immersive Experiences

Gesture control opens up a wealth of possibilities for creating immersive experiences. Consider the example of a shopping app: instead of scrolling through products, users could walk around a virtual store, picking up items to examine them, or even trying them on – all from the comfort of their own home. This high level of immersion can significantly enhance user engagement and satisfaction.

The Role of Feedback

Feedback is crucial in gesture-controlled interfaces. Users need to know when their actions have been recognized and what the result of their action was. Apple Vision Pro relies on visual and auditory feedback, such as hover highlights and sound effects, to stand in for the tactile response that in-air gestures lack, so users always have a clear understanding of their interactions and the resulting outcomes.

The Future of Gesture Control in UX Design

The integration of gesture control in Apple Vision Pro signals a broader shift in the UX design world toward spatial, multi-modal interaction. As technology continues to evolve, we as UX designers need to stay abreast of these changes, experimenting with and understanding these new tools to create the most intuitive and engaging experiences possible.

Conclusion: The Impact of Apple Vision Pro on UX Design

Revolutionizing UX Design

The Apple Vision Pro marks a significant milestone in the field of UX design. Its unique gesture-based control system not only represents a leap in technology but also challenges our conventional understanding of user interaction. As UX designers, it is exciting to consider the innovative design opportunities that this advancement presents.

Evolving User Expectations

With the introduction of these advanced technologies, user expectations will inevitably evolve. Users will increasingly look for more intuitive, immersive, and engaging experiences. As designers, we must rise to the occasion, ensuring we harness the capabilities of these new technologies to meet and exceed these expectations.

Embracing the Future

The advent of Apple Vision Pro is a strong indication that the future of UX design lies in the realm of virtual and augmented reality. Embracing these technologies and the new interaction methods they offer is not just an exciting prospect, but a necessary one. As designers, our mission is to shape the future of human-computer interaction, making technology more accessible, intuitive, and enjoyable for everyone.